What are GPUs and why do they need so much energy?

Understanding the chips powering the AI boom

AI is one of the fastest-growing technologies in history, and its power needs are exploding with it. At the center of this surge are Graphics Processing Units (GPUs). Popularized in the early 2000s for video games, these computer chips have recently become the most sought-after pieces of hardware in the world. This week’s newsletter breaks down what GPUs are, what they’re doing to our power grids, and how they will shape the next decade of data center growth.

What is a GPU?

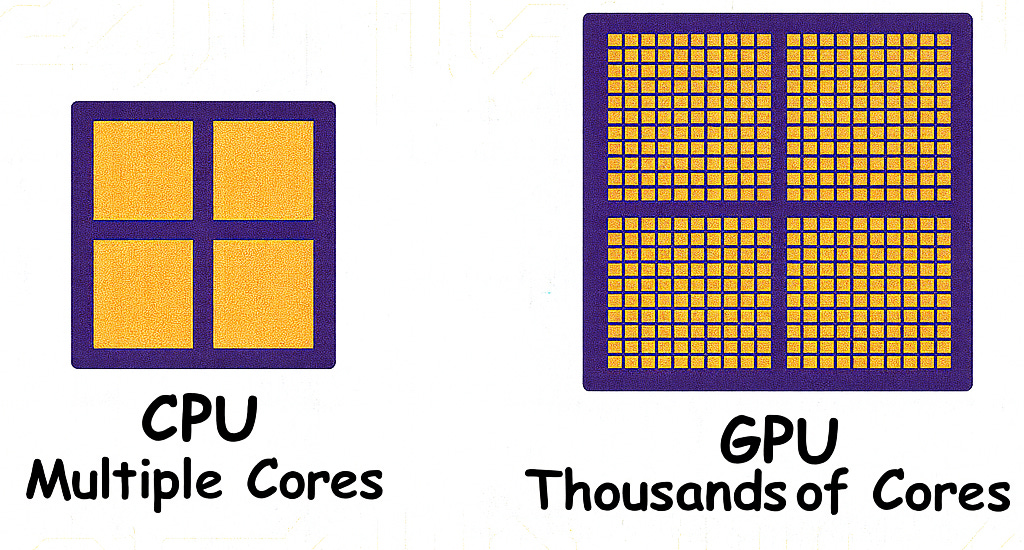

The Central Processing Unit (CPU) is a tiny computer chip that is the “brain” of the computer. It is composed of a few processing cores that allow it to run a few complex tasks at the same time. A processing core is a self-contained unit on the silicon chip, built from microscopic transistors and electrical interconnects, that executes instructions.

A GPU is a similar computer chip. The main difference is that it has thousands of simpler processing cores, which allow it to run thousands of less complex tasks at the same time. AI models work by doing enormous numbers of simple math operations concurrently, which is why AI companies need GPUs to run them.

Why are they so power hungry?

GPUs run at clock rates of a billion or more cycles per second, and unlike a CPU they keep thousands of processing cores busy on every cycle. That combination leads to a power consumption of 1,000W or more per chip (Nvidia). An AI data center can house hundreds of thousands of chips.

The processing cores also generate a lot of heat when they’re all working at the same time. They require sophisticated cooling systems such as industrial fans and liquid cooling loops. As a rule of thumb, for every watt used to power the GPUs, roughly another watt goes to the cooling system (Cerio).
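To see how these numbers add up, here is a rough back-of-the-envelope sketch in Python. It assumes the 1,000W-per-chip figure and the one-watt-of-cooling-per-watt-of-compute rule of thumb cited above. The function name and the 100,000-GPU facility size are hypothetical, chosen only for illustration.

```python
def facility_power_mw(num_gpus, watts_per_gpu=1_000, cooling_watts_per_gpu_watt=1.0):
    """Rough facility power in megawatts, counting GPUs and cooling only.

    Defaults are assumptions taken from the figures in the text:
    1,000 W per GPU and 1 W of cooling for every 1 W of GPU power.
    """
    gpu_watts = num_gpus * watts_per_gpu
    cooling_watts = gpu_watts * cooling_watts_per_gpu_watt
    return (gpu_watts + cooling_watts) / 1_000_000  # W -> MW


# Hypothetical facility with 100,000 GPUs
print(facility_power_mw(100_000))  # 200.0
```

Under these assumptions, a 100,000-GPU facility draws about 200 MW before counting networking, storage, or power-conversion losses, so the real number would be higher.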

GPUs with lower energy consumption are likely on the way. Many companies and research teams are already working on this problem; the most promising (in my opinion) is Carnegie Mellon’s research on new GPU technology that might make AI workloads up to 20x more energy efficient (CMU). If that research is successful, it could become the new industry standard.

How does this impact our grid?

AI data centers can require as much power as small cities. Globally, data centers currently use 400-500 terawatt-hours (TWh) of electricity per year, roughly enough to power all of France. That number is expected to double to almost 1,000 TWh by 2030, which will keep straining the grid (International Energy Agency).
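For a sense of scale, the short Python sketch below converts annual energy (TWh) into average continuous power (GW) and computes the yearly growth rate implied by a doubling. The 450 TWh starting point is simply the midpoint of the range above, and the six-year horizon is an assumption, since the text does not state the base year. Both function names are illustrative.

```python
HOURS_PER_YEAR = 8_760  # 365 days * 24 hours


def average_power_gw(twh_per_year):
    """Average continuous power (GW) needed to consume twh_per_year."""
    gwh_per_year = twh_per_year * 1_000  # 1 TWh = 1,000 GWh
    return gwh_per_year / HOURS_PER_YEAR


def implied_annual_growth(start_twh, end_twh, years):
    """Compound annual growth rate that takes start_twh to end_twh."""
    return (end_twh / start_twh) ** (1 / years) - 1


# Assumed inputs: 450 TWh today (midpoint of 400-500), 1,000 TWh after 6 years
print(round(average_power_gw(450), 1), "GW average today")
print(round(average_power_gw(1_000), 1), "GW average by 2030")
print(round(implied_annual_growth(450, 1_000, 6) * 100, 1), "% growth per year")
```

With these assumed inputs, today's usage works out to a bit over 50 GW of around-the-clock demand, and reaching 1,000 TWh implies growth of roughly 14% per year.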

Utility companies are starting to receive an influx of requests for data center interconnections, and they don’t have enough power to light them all up. Because of this, tech companies are starting to build power plants behind the meter, meaning on-site generation that feeds the data center directly instead of drawing from the utility’s grid. This lets them avoid a slow permitting process and the notorious utility interconnection queue, where power generation projects sit in limbo for months or years while they wait for their turn to be evaluated by the utility’s engineering team.

The good news is that electric utilities will pretty much always prioritize keeping the lights on for their ratepayers, and they aren’t afraid to turn down projects that may put that end goal at risk, no matter how deep the pockets of the requester are.

What does this mean for you?

Utilities and grid operators: Data center load growth is here to stay; be prepared to spend on upgrades to transmission, substations, and peaking resources.

Regulators and policymakers: Do whatever you can to cut approval timelines from years to months, or developers will continue to build behind the meter.

EPCs, manufacturers, and developers: AI data centers should be at the top of your list of priorities moving forward. It is a well-funded and fast-moving industry that prioritizes speed and reliability over cost.

Sources

This week’s top news

🌴The surprising climate upside of AI🌴

⚛️Can Microsoft and Helion win the nuclear fusion race?⚛️

⛽SMRs won't be here until the 2030s; big tech is turning to gas turbines for now⛽